The Brazilian data protection regulator (ANPD) has prohibited Meta from using Brazilian personal data to train its artificial intelligence models, noting the potential for “serious damage and difficulties for users at large.” The decision comes after Meta updated its privacy policy in May, allowing the social media giant to use Brazilian public Facebook, Messenger, and Instagram data (including posts, photos, and captions) for artificial intelligence training.

The decision follows a Human Rights Watch report published last month, which found that identifiable personal images of Brazilian children are included in LAION-5B, one of the largest image-caption datasets used to train AI models, putting them at risk of deepfakes and other forms of exploitation.

According to ANPD, the policy poses an “imminent risk of serious and irreparable or difficult-to-repair damage to the fundamental rights” of Brazilian users, as stated in its decision published in the nation’s official gazette and reported by The Associated Press. According to the ANPD, there are 102 million Brazilian user accounts on Facebook alone, making Brazil one of Meta’s biggest markets. ANPD issued its notice on Tuesday, giving Meta five working days to comply with the directive; failure to do so could result in a daily fine of $8,808.

In a statement to the AP, Meta said that the decision is “a step backwards for innovation, competition in AI development, and further delays bringing the benefits of AI to people in Brazil” and that its revised policy “complies with privacy laws and regulations in Brazil.” Although Meta states users have the option to refuse to have their data used to train AI, ANPD claims that doing so is made more difficult by “excessive and unjustified obstacles.”

Meta had to halt its plans to train its AI models on European Facebook and Instagram posts after facing similar resistance from EU regulators. In the US, where user privacy protections are weaker, Meta's updated data collection policies are already in effect.

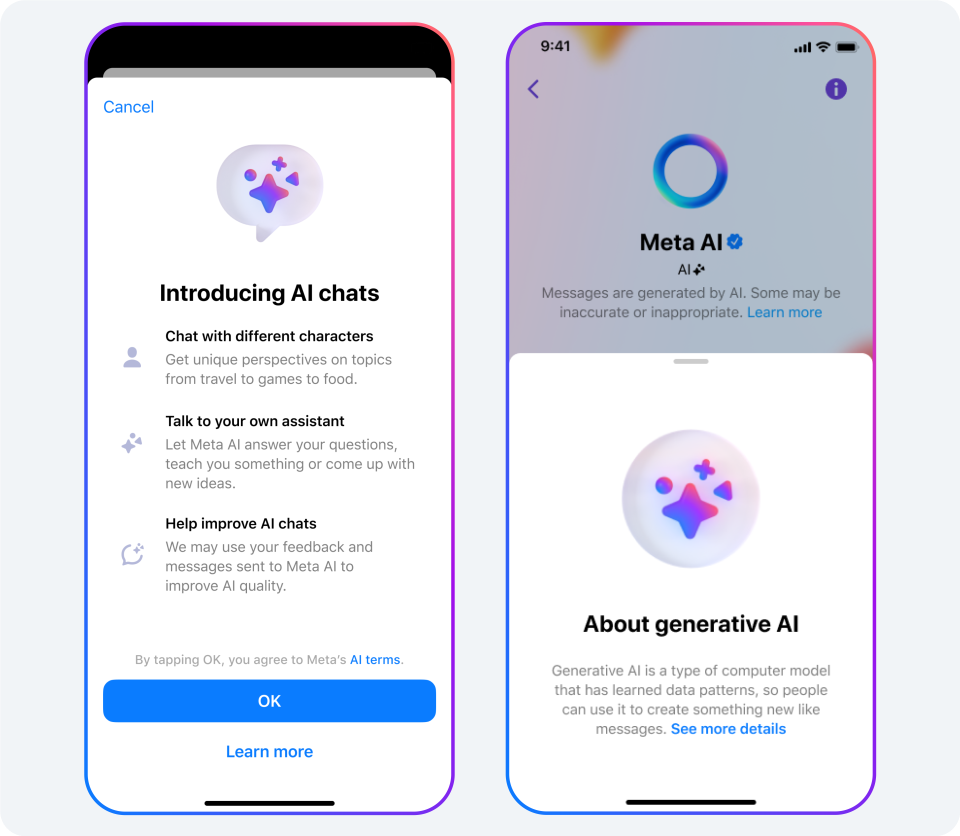

Meta’s generative AI models are powered by the data you share on its platforms. These models, which underpin features such as Meta AI and AI Creative Tools, are meant to improve the user experience. According to Meta, their objectives are to enable creative expression and offer quick solutions to difficult problems. The models are trained on the collected data to learn the relationships between different kinds of content. Text models, for example, are trained to predict language patterns and produce conversational responses, while image models are trained to generate new images from descriptive text prompts.

According to its website, Meta is using “publicly available online and licensed information to train AI at Meta, as well as the information that people have shared publicly on Meta’s products and services. This information includes things like public posts or public photos and their captions. In the future, we may also use the information people share when interacting with our generative AI features, like Meta AI, or with a business, to develop and improve our AI products.” Meta says it has a robust internal Privacy Review process in place and asserts its commitment to privacy. According to Meta, this entails assessing potential privacy risks and putting mitigation measures in place. Its operations are guided by five fundamental principles: privacy and security, governance and accountability, robustness and safety, fairness and inclusiveness, and transparency and control.

Finally, is everyone’s data subject to Meta’s AI training? In practice, no; not everyone’s information may be used for AI training. Stronger data protection rules apply to residents of the United Kingdom (UK), EU member states, and the European Economic Area (EEA).
