Although Google has long prohibited sexually explicit advertisements, the company has not prohibited advertisers from promoting services that let users create deepfake porn and other generated nudes. That is about to change.

Google defines “sexually explicit content” as “text, image, audio, or video of graphic sexual acts intended to arouse,” and the company currently forbids advertisers from promoting it. The new policy also prohibits advertising services that help users produce that kind of content, whether by altering a person’s image or generating a new one.

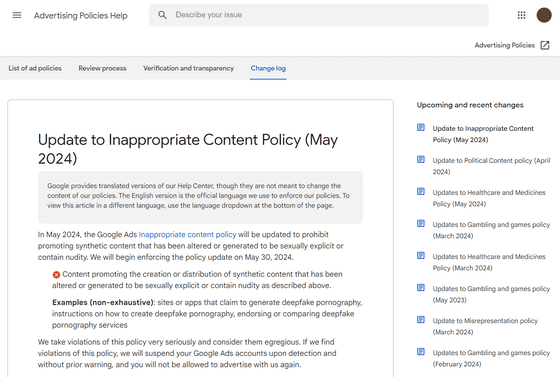

The new policy forbids “promoting synthetic content that has been altered or generated to be sexually explicit or contain nudity,” including websites and applications that teach users how to make deepfake porn. It will take effect on May 30.

A Google spokesperson, Michael Aciman, stated that “this update is to explicitly prohibit advertisements for services that offer to create deepfake pornography or synthetic nude content.” Ads that violate the policy will be removed, the company says, adding that it uses both human reviews and automated systems to enforce its rules. According to the company’s annual Ads Safety Report, Google removed over 1.8 billion ads in 2023 for violating its policies on sexual content.

According to 404 Media, which first reported the change, Google has long forbidden advertisers from promoting sexually explicit material, but some apps that help create deepfake pornography have gotten around the ban by presenting themselves as non-sexual on the Google Play Store or in Google Ads. For instance, one face-swapping app advertised itself as sexually explicit on porn websites but not in the Google Play Store.

In recent years, nonconsensual deepfake pornography has become a persistent problem. Two middle school students in Florida were arrested last December after reportedly creating AI-generated nude pictures of their classmates. A 57-year-old Pittsburgh man was recently sentenced to more than 14 years in prison for possessing deepfake child sexual abuse material. Last year, the FBI warned of an “uptick” in extortion schemes that use AI-generated nude photos as blackmail. While many AI models make it difficult, if not impossible, for users to create AI-generated nudes, some services do let users generate sexual content.

Legislative action against deepfake pornography may be coming soon. The DEFIANCE Act, which was introduced in the House and Senate last month, would create a legal mechanism allowing victims of “digital forgery” to sue anyone who creates or distributes deepfakes of them without their consent.
